
(1700 words; 10 minute read. This post was inspired by a suggestion from Chapter 2 (p. 60) of Jess Whittlestone's dissertation on confirmation bias.) Uh oh. No family reunion this spring—but still, Uncle Ron managed to get you on the phone for a chat. It started pleasantly enough, but now the topic has moved to politics.

He says he’s worried that Biden isn’t actually running things as president, and that instead more radical folks are running the show. He points to some video anomalies (“hand floating over mic”) that led to the theory that many apparent videos of Biden are fake. You point out that the basis for that theory has been debunked—the relevant anomaly was due to a video compression error, and several other videos taken from the same angle show the same scene. He reluctantly seems to accept this. The conversation moves on. But a few days later, you see him posting on social media about how that same video of Biden may have been fake! Sigh. Didn’t you just correct that mistake? Why do people cling on to their beliefs even after they’ve been debunked? This is the problem; another instance of people’s irrationality leading to our polarized politics—right? Well, it is a problem. But it may be all the thornier because it’s often rational.

Apologies for dropping off the map there—turns out moving and starting a new job in the midst of a pandemic takes up some bandwidth!

Now that I've found my feet a bit, I just wanted to post a few quick blog updates:

More coming soon!

(1400 words; 7 minute read.) The most important factor that drove Becca and me apart, politically, is that we went our separate ways, socially. I went to a liberal university in a city; she went to a conservative college in the country. I made mostly-liberal friends and listened to mostly-liberal professors; she made mostly-conservative friends and listened to mostly-conservative ones.

As we did so, our opinions underwent a large-scale version of the group polarization effect: the tendency for group discussions amongst like-minded individuals to lead to opinions that are more homogenous and more extreme in the same direction as their initial inclination. The predominant force that drives the group polarization effect is simple: discussion amongst like-minded individuals involves sharing like-minded arguments. (For more on the effect of social influence, see this post.) Spending time with liberals, I was exposed to a predominance of liberal arguments; as a result, I became more liberal. Vice versa for Becca. Stated that way, this effect can seem commonsensical: of course it’s reasonable for people in such groups to polarize. For example: I see more arguments in favor of gun control; Becca sees more arguments against it. So there’s nothing puzzling about the fact that, years later, we have wound up with radically different opinions about gun control. Right? Not so fast.

(1700 words; 8 minute read.) It’s September 21, 2020. Justice Ruth Bader Ginsburg has just died. Republicans are moving to fill her seat; Democrats are crying foul. Fox News publishes an op-ed by Ted Cruz arguing that the Senate has a duty to fill her seat before the election. The New York Times publishes an op-ed on Republicans’ hypocrisy and Democrats’ options. Becca and I each read both. I—along with my liberal friends—conclude that Republicans are hypocritically and dangerously violating precedent. Becca—along with her conservative friends—concludes that Republicans are doing what needs to be done, and that Democrats are threatening to violate democratic norms (“court packing??”) in response. In short: we both see the same evidence, but we react in opposite ways—ways that lead each of us to be confident in our opposing beliefs. In doing so, we exhibit a well-known form of confirmation bias.
And we are rational to do so: we both are doing what we should expect will make our beliefs most accurate. Here’s why. Reasonably Polarized will be back next week. In the meantime, here's a guest post on the rationality of framing effects, by Sarah Fisher (University of Reading), based on a forthcoming paper of hers that asks whether the "at least" reading of number terms can yield a rational explanation of framing effects. The paper recently won Crítica's essay competition on the theme of empirically informed philosophy—congrats Sarah! 2300 words; 10 minute read. As we learn to live in the ‘new normal’, amidst the easing and tightening of local and national lockdowns, day-to-day decision-making has become fraught with difficulty. Here are some of the questions I’ve been grappling with lately:

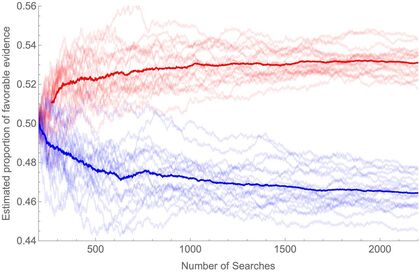

(2000 words; 9 minute read.) So far, I’ve laid the foundations for a story of rational polarization. I’ve argued that we have reason to explain polarization through rational mechanisms; showed that ambiguous evidence is necessary to do so; and described an experiment illustrating this possibility.

Today, I’ll conclude the core theoretical argument. I'll give an ambiguous-evidence model of our experiment that both (1) explains the predictable polarization it induces, and (2) shows that such polarization can in principle be profound (both sides end up disagreeing massively) and persistent (neither side changes their opinion when they discover that they disagree). With this final piece of the theory in place, we’ll be able to apply it to the empirical mechanisms that drive polarization, and see how the polarizing effects of persuasion, confirmation bias, motivated reasoning, and so on, can all be rationalized by ambiguous evidence. Recall our polarization experiment. (2300 words, 12 minute read.)

So far, I've (1) argued that we need a rational explanation of polarization, (2) described an experiment showing how in principle we could give one, and (3) suggested that this explanation can be applied to the psychological mechanisms that drive polarization. Over the next two weeks, I'll put these normative claims on a firm theoretical foundation. Today I'll explain why ambiguous evidence is both necessary and sufficient for predictable polarization to be rational. Next week I'll use this theory to explain our experimental results and show how predictable, profound, persistent polarization can emerge from rational processes. With those theoretical tools in place, we'll be in a position to use them to explain the psychological mechanisms that in fact drive polarization. So: what do I mean by "rational" polarization; and why is "ambiguous" evidence the key? It’s standard to distinguish practical from epistemic rationality. Practical rationality is doing the best that you can to fulfill your goals, given the options available to you. Epistemic rationality is doing the best that you can to believe the truth, given the evidence available to you. It’s practically rational to believe that climate change is a hoax if you know that doing otherwise will lead you to be ostracized by your friends and family. It’s not epistemically rational to do so unless your evidence—including the opinions of those you trust—makes it likely that climate change is a hoax. My claim is about epistemic rationality, not practical rationality. Given how important our political beliefs are to our social identities, it’s not surprising that it’s in our interest to have liberal beliefs if our friends are liberal, and to have conservative beliefs if our friends are conservative. Thus it should be uncontroversial that the mechanisms that drive polarization can be practically rational—as people like Ezra Klein and Dan Kahan claim.
The more surprising claim I want to defend is that ambiguities in political evidence make it so that liberals and conservatives who are doing the best they can to believe the truth will tend to become more confident in their opposing beliefs. To defend this claim, we need a concrete theory of epistemic rationality. (2200 words; 10 min read)

When Becca and I left our home town in central Missouri 10 years ago, I made my way to a liberal university in the city, while she made her way to a conservative college in the country. As I’ve said before, part of what’s fascinating about stories like ours is that we could predict that we’d become polarized as a result: that I’d become more liberal; she, more conservative. But what, exactly, does that mean? In what sense have Americans polarized—and in what sense is the predictability of this polarization new? That’s a huge question. Here’s the short of it. (1700 words; 8 minute read)

The core claim of this series is that political polarization is caused by individuals responding rationally to ambiguous evidence. To begin, we need a possibility proof: a demonstration of how ambiguous evidence can drive apart those who are trying to get to the truth. That’s what I’m going to do today. I’m going to polarize you, my rational readers. (1700 words; 8 minute read.) [9/4/21 update: if you'd like to see the rigorous version of this whole blog series, check out the paper on "Rational Polarization" I just posted.] A Standard Story

I haven’t seen Becca in a decade. I don’t know what she thinks about Trump, or Medicare for All, or defunding the police. But I can guess. Becca and I grew up in a small Midwestern town. Cows, cornfields, and college football. Both of us were moderate in our politics; she a touch more conservative than I—but it hardly mattered, and we hardly noticed. After graduation, we went our separate ways. I, to a liberal university in a Midwestern city, and then to graduate school on the East Coast. She, to a conservative community college, and then to settle down in rural Missouri. I––of course––became increasingly liberal. I came to believe that gender roles are oppressive, that racism is systemic, and that our national myths let the powerful paper over the past. And Becca? This is a guest post by Brian Hedden (University of Sydney). (3000 words; 14 minute read) Predictive and decision-making algorithms are playing an increasingly prominent role in our lives. They help determine what ads we see on social media, where police are deployed, who will be given a loan or a job, and whether someone will be released on bail or granted parole. Part of this is due to the recent rise of machine learning. But some algorithms are relatively simple and don’t involve any AI or ‘deep learning.’ As algorithms enter into more and more spheres of our lives, scholars and activists have become increasingly interested in whether they might be biased in problematic ways. The algorithms behind some facial recognition software are less accurate for women and African Americans. Women are less likely than men to be shown an ad relating to high-paying jobs on Google. Google Translate translated neutral non-English pronouns into masculine English pronouns in sentences about stereotypically male professions (e.g., ‘he is a doctor’). 
When Alexandria Ocasio-Cortez noted the possibility of algorithms being biased (e.g., in virtue of encoding biases found in their programmers, or the data on which they are trained), Ryan Saavedra, a writer for the conservative Daily Wire, mocked her on Twitter, writing “Socialist Rep. Alexandria Ocasio-Cortez claims that algorithms, which are driven by math, are racist.” I think AOC was clearly right and Saavedra clearly wrong. It’s true that algorithms do not have inner feelings of prejudice, but that doesn’t mean they cannot be racist or biased in other ways. But in any particular case, it’s tricky to determine whether a given algorithm is in fact biased or unfair. This is largely due to the lack of agreed-upon criteria of algorithmic fairness.

(This post is co-written with Matt Mandelkern, based on our joint paper on the topic. 2500 words; 12 minute read.)

It’s February 2024. Three Republicans are vying for the Presidential nomination, and FiveThirtyEight puts their chances at:

Some natural answers: "Pence"; "Pence or Carlson"; "Pence, Carlson, or Haley". In a Twitter poll earlier this week, the first two took up a majority (53.4%) of responses:

But wait! If you answered "Pence", or "Pence or Carlson", did you commit the conjunction fallacy? This is the tendency to say that narrower hypotheses are more likely than broader ones––such as saying that P&Q is more likely than Q—contrary to the laws of probability. Since every way in which "Pence" or "Pence or Carlson" could be true is also a way in which “Pence, Carlson, or Haley” would be true, the third option is guaranteed to be at least as likely as each of the first two.
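To make the probability point concrete, here is a minimal Python sketch. The chances below are made-up placeholders (the figures quoted in the post are not reproduced here), and `answer_probability` is just an illustrative helper. It checks that a broader answer can never be less probable than a narrower answer it contains.

```python
# Hypothetical chances, for illustration only; NOT the figures from the post.
chances = {"Pence": 0.45, "Carlson": 0.35, "Haley": 0.20}


def answer_probability(answer, chances):
    """Probability that the winner is one of the candidates named in `answer`."""
    return sum(chances[name] for name in answer)


# Three nested answers, from most specific to least specific.
answers = [
    ("Pence",),
    ("Pence", "Carlson"),
    ("Pence", "Carlson", "Haley"),
]

probs = [answer_probability(a, chances) for a in answers]

# Each answer includes every candidate in the one before it,
# so its probability cannot be lower.
assert all(earlier <= later for earlier, later in zip(probs, probs[1:]))

for answer, p in zip(answers, probs):
    print(" or ".join(answer), "->", round(p, 2))
```

On these placeholder numbers, "Pence" is the least probable of the three answers but also the most specific one, which is exactly the tension between accuracy and informativeness at issue here.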

Does this mean answering our question with “Pence” or “Pence or Carlson” was a mistake? We don’t think so. We think what you were doing was guessing. Rather than simply ranking answers for probability, you were making a tradeoff between being accurate (saying something probable) and being informative (saying something specific). In light of this tradeoff, it’s perfectly permissible to guess an answer (“Pence”) that’s less probable––but more informative––than an alternative (“Pence, Carlson, or Haley”). Here we'll argue that this explains––and partially rationalizes––the conjunction fallacy.

(This is a guest post by Jake Quilty-Dunn, replying to my reply to his original post on rationalization and (ir)rationality. 1000 words; 5 minute read.) Thanks very much to Kevin for inviting me to defend my comparatively gloomy picture of human nature on this blog, and for continuing the conversation with his thoughtful reply.

(1000 words; 5 minute read.)

Here are a few thoughts I had after reading Jake Quilty-Dunn’s excellent guest post, in which he makes the case for the irrationality of rationalization (thanks Jake!). I’ll jump right in, so make sure you take a look at his piece before reading this one. This is a guest post from Jake Quilty-Dunn (Oxford / Washington University), who has an interestingly different take on the question of rationality than I do. This post is based on a larger project; check out the full paper here. (2500 words; 12 minute read.) Is it really possible that people tend to be rational? On the one hand, Kevin and others who favor “rational analysis” (including Anderson, Marr, and leading figures in the recent surge of Bayesian cognitive science) have made the theoretical point that the mind evolved to solve problems. Looking at how well it solves those problems—in perception, motor control, language acquisition, sentence parsing, and elsewhere—it’s hard not to be impressed. You might then extrapolate to the acquisition and updating of beliefs and suppose those processes ought to be optimal as well. On the other hand, many of us would like simply to point to our experience with other human beings (and, in moments of brutal honesty, with ourselves). That experience seems on its face to reveal a litany of biases and irrational reactions to circumstances, generating not only petty personal problems but even larger social ills.

(2900 words; 15 minute read.)

[5/11 Update: Since the initial post, I've gotten a ton of extremely helpful feedback (thanks everyone!). In light of some of those discussions I've gone back and added a little bit of material. You can find it by skimming for the purple text.]

[5/28 Update: If I rewrote this now, I'd now reframe the thesis as: "Either the gambler's fallacy is rational, or it's much less common than it's often taken to be––and in particular, standard examples used to illustrate it don't do so."] A title like that calls for some hedges––here are two. First, this is work in progress: the conclusions are tentative (and feedback is welcome!). Second, all I'll show is that rational people would often exhibit this "fallacy"––it's a further question whether real people who actually commit it are being rational. Off to it. On my computer, I have a bit of code called a "koin". Like a coin, whenever a koin is "flipped" it comes up either heads or tails. I'm not going to tell you anything about how it works, but the one thing everyone should know about koins is the same thing that everyone knows about coins: they tend to land heads around half the time. I just tossed the koin a few times. Here's the sequence it's landed in so far:

T H T T T T T

How likely do you think it is to land heads on the next toss? You might look at that sequence and be tempted to think a heads is "due", i.e. that it's more than 50% likely to land heads on the next toss. After all, koins usually land heads around half the time––so there seems to be an overly long streak of tails occurring. But wait! If you think that, you're committing the gambler's fallacy: the tendency to think that if an event has recently happened more frequently than normal, it's less likely to happen in the future. That's irrational. Right? Wrong. Given your evidence about koins, you should be more than 50% confident that the next toss will land heads; thinking otherwise would be a mistake. (This is a guest post by Brian Hedden. 2400 words; 10 minute read.)

It’s now part of conventional wisdom that people are irrational in systematic and predictable ways. Research purporting to demonstrate this has resulted in at least 2 Nobel Prizes and a number of best-selling books. It’s also revolutionized economics and the law, with potentially significant implications for public policy. Recently, some scholars have begun pushing back against this dominant irrationalist narrative. Much of the pushback has come from philosophers, and it has come by way of questioning the normative models of rationality assumed by the irrationalist economists and psychologists. Tom Kelly has argued that sometimes, preferences that appear to constitute committing the sunk cost fallacy should perhaps really be regarded as perfectly rational preferences concerning the narrative arc of one’s life and projects. Jacob Nebel has argued that status quo bias can sometimes amount to a perfectly justifiable conservatism about value. And Kevin Dorst has argued that polarization and the overconfidence effect might be perfectly rational responses to ambiguous evidence. In this post, I’ll explain my own work pushing back against the conclusion that humans are predictably irrational in virtue of displaying so-called hindsight bias. Hindsight bias is the phenomenon whereby knowing that some event actually occurred leads you to give a higher estimate of the degree to which that event’s occurrence was supported by the evidence available beforehand. I argue that not only is hindsight bias often not irrational; sometimes it’s even rationally required, and so failure to display hindsight bias would be irrational. (This is a guest post by Sarah Fisher. 2000 words; 8 minute read.) We could all do with imagining ourselves into a different situation right now. For me, it would probably be a sunny café, with a coffee and a delicious pastry in front of me––bliss. 
Here’s another scenario that seems ever more improbable as time goes by (remember when we played and watched sports…?!):

(2500 words; 11 minute read.)

Last week I had back-to-back phone calls with two friends in the US. The first told me he wasn’t too worried about Covid-19 because the flu already is a pandemic, and although this is worse, it’s not that much worse. The second was—as he put it—at “DEFCON 1”: preparing for the possibility of a societal breakdown, and wondering whether he should buy a gun. I bet this sort of thing sounds familiar. People have had very different reactions to the pandemic. Why? And equally importantly: what are we to make of such differences? The question is political. Though things are changing fast, there remains a substantial partisan divide in people’s reactions: for example, one poll from early this week found that 76% of Democrats saw Covid as a “real threat”, compared to only 40% of Republicans (continuing the previous week’s trend). What are we to make of this “pandemic polarization”? Must Democrats attribute partisan-motivated complacency to Republicans, or Republicans attribute partisan-motivated panic to Democrats? I’m going to make the case that the answer is no: there is a simple, rational process that can drive these effects. Therefore we needn’t—I’d say shouldn’t—take the differing reaction of the “other side” as yet another reason to demonize them. I just published a new piece in the Oxonian Review. It argues that a general problem with claimed demonstrations of irrationality is their reliance on standard economic models of rational belief and action, and illustrates the point by explaining some great work by Tom Kelly on the sunk cost fallacy and by Brian Hedden on hindsight bias.

Check out the full article here.

2400 words; 10 minute read.

I bet you’re underestimating yourself. Humor me with a simple exercise. When I say so, close your eyes, turn around, and flicker them open for just a fraction of a second. Note the two most important objects you see, along with their relative positions. Ready? Go. I bet you succeeded. Why is that interesting? Because the “simple” exercise you just performed requires solving a maddeningly difficult computational problem. And the fact that you solved it bears on the question of how rational the human mind is. 2400 words; 10 minute read.

The Problem We all know that people now disagree over political issues more strongly and more extensively than at any time in recent memory. And—we are told—that is why politics is broken: polarization is the political problem of our age. Is it? 2400 words; 10 minute read.

A Mistake Do people tend to be overconfident? Let’s find out. For each question, select your answer, and then rate your confidence in that answer on a 50–100% scale:


Kevin Dorst, philosopher at MIT, trying to convince people that their opponents are more reasonable than they think
