
(1000 words; 5 minute read.)

Here are a few thoughts I had after reading Jake Quilty-Dunn’s excellent guest post, in which he makes the case for the irrationality of rationalization (thanks Jake!). I’ll jump right in, so make sure you take a look at his piece before reading this one.

I very much like Jake’s distinction between merely positing an input-output function to explain (ir)rational behavior, vs. positing a particular causal process underlying it. It seems absolutely right to me that this is a strategy that has the potential to make progress on debates over (ir)rationality. And I definitely agree that the evidence he presents raises some challenges for a rational picture of rationalization!

But I want to raise a couple of questions to probe how severe those challenges are. As I understand it, there are two main pieces of evidence in favor of the (irrational) dissonance-reduction mechanism: (1) people’s tendency to rationalize is mediated by negative affect (the desire to avoid an unpleasant feeling), and (2) the way they rationalize tends to be closely linked to maintaining stable motivation. My questions: Should (1) be surprising on a rational retelling of the narrative? And how strong is the evidence for (2)?

To (1): if rationalization were generally a result of rational revisions of beliefs, would it be surprising that this process is mediated through discomfort? In general, processes that take cognitive effort require some motivational push to get people to do them. Given that, why not think that the negative affect associated with dissonance is the mind’s signal to itself, “Hey––figure this out!”?

I think a similar feeling will be familiar to lots of people, especially those who’ve done much math, coding, or logic. There’s a distinctively exciting––but also agonizing––feeling of being stuck on a proof or problem; a strong discomfort with not knowing how to figure out the answer, which can be quite motivating. More generally, I suspect curiosity is often driven by some form of negative affect.

For an example, consider this riddle:

On Saturday, Becca starts climbing a mountain at 9am, gets to the top at 6pm, and spends the night there. On Sunday, she starts walking down (on the same path she came up) at 9am, and gets to the bottom at 6pm. Question: is there a time of day t such that, at t, she was at exactly the same location on both Saturday and Sunday? (You don't have to say what the time is; just whether there is guaranteed to be such a time.)

Think about it. I’m not going to tell you the answer (yet). But I’ll tell you that the answer is neat––most people have an “Aha!” moment when they figure it out. So keep thinking it over…

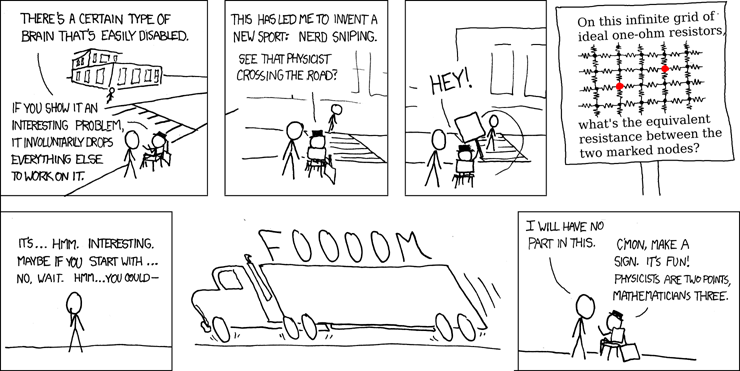

As you do so, reflect on how it feels to think it over and not know the answer. A bit uncomfortable, maybe even frustrating, isn’t it? Curiosity––the scientists’ famous, “Hm, that’s funny…”––can be quite the motivator! I appeal to authority:

The point: I wonder how surprising it would be, on a rational picture, that rationalization is mediated by a negative feeling. Might that feeling be your mind’s signal to itself to figure something out––be it a riddle about a backpacker, or a tension in your web of beliefs? This would predict, for instance, that giving people a sugar pill and telling them it’ll make them uncomfortable would lead to fewer people solving riddles like the one above. I have no idea if that’s right! But it seems like a not-bonkers idea to test. (Okay okay, the answer to the riddle: imagine that Sati starts walking up the mountain, and Sunny starts walking down it, at 9am on the same day. Will there be a time t at which they are both at the same location?)
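For anyone who wants to check the Sati-and-Sunny reframing numerically, here is a minimal Python sketch. The two pace functions are made up purely for illustration (the riddle doesn't say how fast Becca walks at any point); the only assumption doing real work is that position changes continuously with time. The gap between the two walkers starts negative (one at the bottom, one at the top) and ends positive, so a simple bisection should home in on a time when it is zero.

```python
import math

START, END = 9.0, 18.0  # 9am to 6pm, as in the riddle


def ascent(t: float) -> float:
    """Hypothetical Saturday walk: fraction of the trail climbed at hour t."""
    s = (t - START) / (END - START)
    return s ** 2  # slow start, fast finish (an arbitrary choice)


def descent(t: float) -> float:
    """Hypothetical Sunday walk: height on the trail (1 = top, 0 = bottom)."""
    s = (t - START) / (END - START)
    return 1.0 - math.sqrt(s)  # fast start, slow finish (also arbitrary)


def meeting_time(up, down, lo=START, hi=END, tol=1e-9):
    """Bisect on gap(t) = up(t) - down(t), which runs from -1 to +1."""
    def gap(t):
        return up(t) - down(t)

    # The sign change is what guarantees a crossing for continuous walks.
    assert gap(lo) < 0 < gap(hi)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if gap(mid) < 0:
            lo = mid  # the climber is still below the descender
        else:
            hi = mid  # they have already crossed
    return (lo + hi) / 2


t_star = meeting_time(ascent, descent)
print(f"Same spot on both days at about hour {t_star:.2f}")
```

Swapping in any other continuous pair of walks that start and end in the right places changes where the meeting happens, but not whether there is one.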

Turn to (2): the idea that rationalization is geared toward maintaining motivation. I’m wondering about how strong the evidence is for this claim; here are two specific questions.

First: can the dissonance studies be replicated third-personally?

Meet Jim. He’s nice, works for a paper company, has a happy marriage, and volunteers with a senior-services organization on the weekends. Jim just participated in an experiment. He sat in a room and adjusted a knob back and forth, following a randomly moving dot, for 20 minutes. The experimenter then asked him if he’d be willing to tell another subject sitting outside that the task was fun, and offered him 1 dollar to do so. Jim did so. Question: what’s your estimate for how fun the knob-adjusting activity was, on a scale of 1–10?

You see the parallel, of course. My guess is that if we ran both this type of question and a variant on which the experimenter offered Jim 100 dollars, people’s estimates of how fun the activity was would be higher in the 1-dollar than the 100-dollar condition. I haven’t found any studies like this (Jake, do you know of any?), but let’s suppose that’s right. In this case, it seems relatively clear that the explanation would go through the fact that you think Jim is a nice person. A nice person saying that an experiment was fun is more evidence that it’s fun if he had little incentive to do so than it is if he had a lot of incentive to do so.

In other words: it seems like the motivation-maintenance explanation would have trouble explaining third-personal cases like this, where your own self-image isn’t at issue. Of course, if there’s a big asymmetry between these types of cases, that’s a good prediction of the dissonance theory! But I’m a bit suspicious about such an asymmetry.

Second: if the purpose of rationalization really is to maintain motivation, why––as Jake says––does dissonance reduction lead people to prefer consistent but negative self-appraisals? Why not instead create a (perhaps inconsistent but) rosy view of yourself, period? In other words, why does consistency play the role it does in cognitive-dissonance effects?

Jake has plenty of things to say about this! So this is in part just an invitation for him to say more. But I think it’s worth flagging that the role of consistency in cognitive dissonance is straightforwardly predicted by (epistemically) rational accounts of it––consistency is a means of getting to the truth––but that irrationalist accounts need to say something further to explain it.

What next? Check out Jake's reply, which (among other things) discusses some interesting experiments bearing on my empirical questions.


Kevin Dorst, philosopher at MIT, trying to convince people that their opponents are more reasonable than they think.
