
(1700 words; 10 minute read. This post was inspired by a suggestion from Chapter 2 (p. 60) of Jess Whittlestone's dissertation on confirmation bias.) Uh oh. No family reunion this spring—but still, Uncle Ron managed to get you on the phone for a chat. It started pleasantly enough, but now the topic has moved to politics.

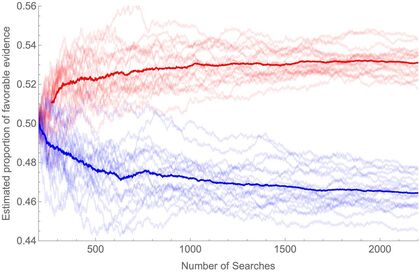

He says he’s worried that Biden isn’t actually running things as president, and that instead more radical folks are running the show. He points to some video anomalies (“hand floating over mic”) that led to the theory that many apparent videos of Biden are fake. You point out that the basis for that theory has been debunked—the relevant anomaly was due to a video compression error, and several other videos taken from the same angle show the same scene. He reluctantly seems to accept this. The conversation moves on. But a few days later, you see him posting on social media about how that same video of Biden may have been fake! Sigh. Didn’t you just correct that mistake? Why do people cling on to their beliefs even after they’ve been debunked? This is the problem; another instance of people’s irrationality leading to our polarized politics—right? Well, it is a problem. But it may be all the thornier because it’s often rational.


(1700 words; 8 minute read.) It’s September 21, 2020. Justice Ruth Bader Ginsburg has just died. Republicans are moving to fill her seat; Democrats are crying foul. Fox News publishes an op-ed by Ted Cruz arguing that the Senate has a duty to fill her seat before the election. The New York Times publishes an op-ed on Republicans’ hypocrisy and Democrats’ options. Becca and I each read both. I, along with my liberal friends, conclude that Republicans are hypocritically and dangerously violating precedent. Becca, along with her conservative friends, concludes that Republicans are doing what needs to be done, and that Democrats are threatening to violate democratic norms (“court packing??”) in response. In short: we both see the same evidence, but we react in opposite ways, ways that lead each of us to be confident in our opposing beliefs. In doing so, we exhibit a well-known form of confirmation bias. And we are rational to do so: we both are doing what we should expect will make our beliefs most accurate. Here’s why.

(1700 words; 8 minute read.) The core claim of this series is that political polarization is caused by individuals responding rationally to ambiguous evidence. To begin, we need a possibility proof: a demonstration of how ambiguous evidence can drive apart those who are trying to get to the truth. That’s what I’m going to do today. I’m going to polarize you, my rational readers.

(1700 words; 8 minute read.) [9/4/21 update: if you'd like to see the rigorous version of this whole blog series, check out the paper on "Rational Polarization" I just posted.]

A Standard Story

I haven’t seen Becca in a decade. I don’t know what she thinks about Trump, or Medicare for All, or defunding the police. But I can guess. Becca and I grew up in a small Midwestern town. Cows, cornfields, and college football. Both of us were moderate in our politics; she a touch more conservative than I, but it hardly mattered, and we hardly noticed. After graduation, we went our separate ways. I, to a liberal university in a Midwestern city, and then to graduate school on the East Coast. She, to a conservative community college, and then to settle down in rural Missouri. I, of course, became increasingly liberal. I came to believe that gender roles are oppressive, that racism is systemic, and that our national myths let the powerful paper over the past. And Becca?

(This post is co-written with Matt Mandelkern, based on our joint paper on the topic. 2500 words; 12 minute read.)

It’s February 2024. Three Republicans are vying for the Presidential nomination, and FiveThirtyEight puts their chances at:

Some natural answers: "Pence"; "Pence or Carlson"; "Pence, Carlson, or Haley". In a Twitter poll earlier this week, the first two took up a majority (53.4%) of responses:

But wait! If you answered "Pence", or "Pence or Carlson", did you commit the conjunction fallacy? This is the tendency to say that narrower hypotheses are more likely than broader ones––such as saying that P&Q is more likely than Q—contrary to the laws of probability. Since every way in which "Pence" or "Pence or Carlson" could be true is also a way in which “Pence, Carlson, or Haley” would be true, the third option is guaranteed to be more likely than each of the first two.
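The probability point above can be checked with a few lines of Python. This is a minimal sketch: the chances below are made-up numbers for illustration (they are not the figures from the post), and it assumes exactly one of the three candidates wins.

```python
# Hypothetical chances, for illustration only (not the post's actual figures).
chances = {"Pence": 0.5, "Carlson": 0.3, "Haley": 0.2}


def probability(answer):
    """Probability that one of the candidates named in `answer` wins.

    Assumes exactly one candidate wins, so the chances of distinct names add up.
    """
    return sum(chances[name] for name in answer)


# Each answer is a tuple of names read as a disjunction ("Pence or Carlson").
answers = [("Pence",), ("Pence", "Carlson"), ("Pence", "Carlson", "Haley")]
probs = [probability(a) for a in answers]

for answer, p in zip(answers, probs):
    print(" or ".join(answer), round(p, 2))
```

Whatever non-negative numbers are plugged in, adding a name to the answer can never lower its probability, which is why the broadest answer cannot be less likely than the narrower ones.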

Does this mean answering our question with “Pence” or “Pence or Carlson” was a mistake? We don’t think so. We think what you were doing was guessing. Rather than simply ranking answers for probability, you were making a tradeoff between being accurate (saying something probable) and being informative (saying something specific). In light of this tradeoff, it’s perfectly permissible to guess an answer (“Pence”) that’s less probable––but more informative––than an alternative (“Pence, Carlson, or Haley”). Here we'll argue that this explains––and partially rationalizes––the conjunction fallacy.
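To make the tradeoff concrete, here is a toy scoring rule in Python. Everything in it is an assumption made for this sketch rather than something stated in the post: the chances are made up, and the rule (a true guess pays more the more candidates it rules out, a false guess pays nothing) is just one simple way of rewarding specificity. The `informativity_weight` parameter is a hypothetical knob for how much the guesser cares about being specific.

```python
# Hypothetical chances and scoring rule, for illustration only.
chances = {"Pence": 0.5, "Carlson": 0.3, "Haley": 0.2}

answers = [("Pence",), ("Pence", "Carlson"), ("Pence", "Carlson", "Haley")]


def expected_value(answer, informativity_weight):
    """Expected payoff of guessing `answer` (a tuple of candidate names).

    A true guess pays informativity_weight ** (fraction of candidates ruled out);
    a false guess pays 0. A weight of 1 means only accuracy matters.
    """
    prob_true = sum(chances[name] for name in answer)
    ruled_out = 1 - len(answer) / len(chances)
    return prob_true * informativity_weight ** ruled_out


def best_guess(informativity_weight):
    """Return the answer with the highest expected payoff."""
    return max(answers, key=lambda a: expected_value(a, informativity_weight))


for weight in (1, 2, 8):
    print(weight, " or ".join(best_guess(weight)))
```

Under these assumptions, a guesser who cares only about accuracy (weight 1) picks the all-inclusive answer, while one who puts more weight on informativity moves to "Pence or Carlson" and eventually to "Pence" alone, even though the narrower answers are less probable.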

(2900 words; 15 minute read.)

[5/11 Update: Since the initial post, I've gotten a ton of extremely helpful feedback (thanks everyone!). In light of some of those discussions I've gone back and added a little bit of material. You can find it by skimming for the purple text.]

[5/28 Update: If I rewrote this now, I'd reframe the thesis as: "Either the gambler's fallacy is rational, or it's much less common than it's often taken to be, and in particular, standard examples used to illustrate it don't do so."]

A title like that calls for some hedges; here are two. First, this is work in progress: the conclusions are tentative (and feedback is welcome!). Second, all I'll show is that rational people would often exhibit this "fallacy"; it's a further question whether real people who actually commit it are being rational. Off to it.

On my computer, I have a bit of code called a "koin". Like a coin, whenever a koin is "flipped" it comes up either heads or tails. I'm not going to tell you anything about how it works, but the one thing everyone should know about koins is the same thing that everyone knows about coins: they tend to land heads around half the time. I just tossed the koin a few times. Here's the sequence it's landed in so far:

T H T T T T T

How likely do you think it is to land heads on the next toss? You might look at that sequence and be tempted to think a heads is "due", i.e. that it's more than 50% likely to land heads on the next toss. After all, koins usually land heads around half the time, so there seems to be an overly long streak of tails occurring. But wait! If you think that, you're committing the gambler's fallacy: the tendency to think that if an event has recently happened more frequently than normal, it's less likely to happen in the future. That's irrational. Right? Wrong. Given your evidence about koins, you should be more than 50% confident that the next toss will land heads; thinking otherwise would be a mistake.

(2400 words; 10 minute read.)

The Problem

We all know that people now disagree over political issues more strongly and more extensively than at any time in recent memory. And, we are told, that is why politics is broken: polarization is the political problem of our age. Is it?

Kevin Dorst, philosopher at MIT, trying to convince people that their opponents are more reasonable than they think.
